
A comparison of model-based and model-free offline reinforcement learning methods for electric vehicle smart charging optimization

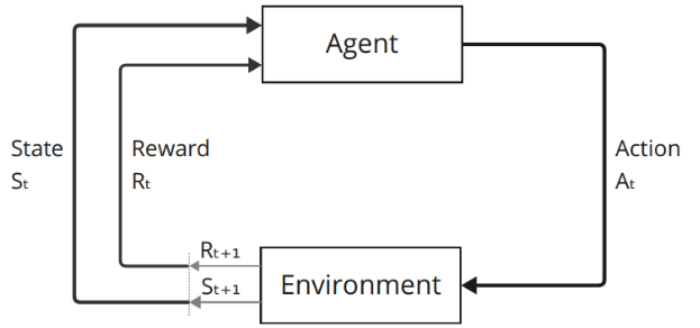

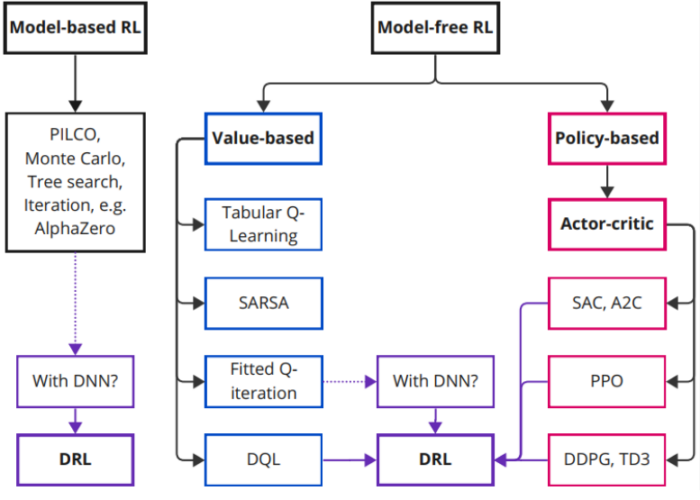

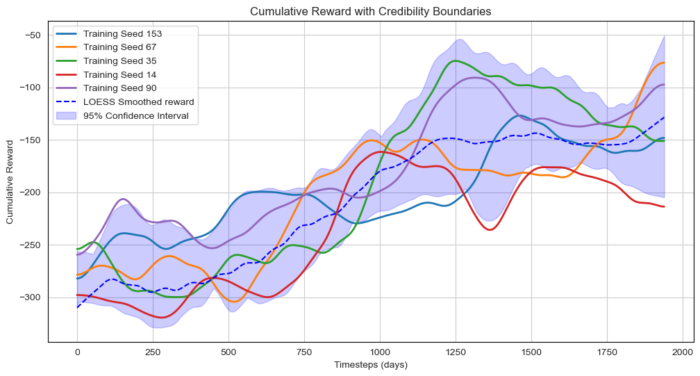

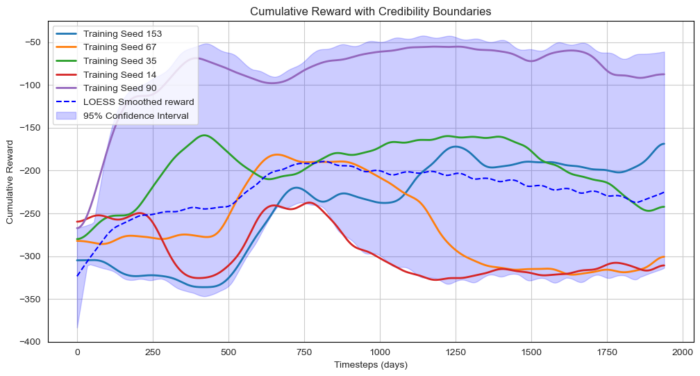

The growing number of electric vehicles (EVs) is increasing peak power loads on grids, causing stability issues and higher expansion costs. Smart charging solutions aim to balance EV charging by integrating data from EV stations, building loads, and solar power. Classical Model Predictive Controllers (MPCs) can address constraints but struggle with non-linearity in real system dynamics. Reinforcement learning (RL) excels at managing uncertainties such as fluctuating demand and complex dynamic interactions but struggles with constraint satisfaction. Therefore, this research proposes a novel model-based RL (RLMPC) approach that uses Behavioral Cloning (BC) to predict the charging strategy, with MPC serving as a policy estimator to constrain the RL solution. A model-free Deep RL (DRL) actor-critic model trained with Proximal Policy Optimization (PPO) serves as a basis for comparison. Both approaches effectively optimized EV charging operations, yielding a consistent savings potential of approximately 20% and adaptability across various operational scenarios.
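To make the idea of constraining a learned charging policy more concrete, here is a minimal Python sketch. It is not the RLMPC method from this research: a simple clip-and-rescale projection stands in for the MPC step, and the function name `constrain_charging`, its parameters, and all numbers are hypothetical. It only illustrates how a proposed per-charger action could be made to respect charger limits and the grid connection limit, given building load and solar output.

```python
from typing import List


def constrain_charging(
    proposed_kw: List[float],
    max_charger_kw: float,
    building_load_kw: float,
    solar_kw: float,
    grid_limit_kw: float,
) -> List[float]:
    """Project a proposed charging action onto simple feasibility limits.

    Assumption: a proportional rescale replaces the MPC optimization
    described in the text; this is an illustration only.
    """
    # Enforce per-charger bounds: no discharging, no more than the charger rating.
    clipped = [min(max(p, 0.0), max_charger_kw) for p in proposed_kw]

    # Power left at the grid connection after the net building load
    # (building demand minus on-site solar generation).
    headroom_kw = max(grid_limit_kw - (building_load_kw - solar_kw), 0.0)

    # If the total charging power exceeds the headroom, scale all chargers
    # down by the same factor so the total matches the headroom.
    total_kw = sum(clipped)
    if total_kw > headroom_kw and total_kw > 0.0:
        scale = headroom_kw / total_kw
        clipped = [p * scale for p in clipped]
    return clipped


# Hypothetical example: three chargers, one proposing an infeasible negative power.
safe_action = constrain_charging(
    proposed_kw=[11.0, 7.0, -2.0],
    max_charger_kw=11.0,
    building_load_kw=40.0,
    solar_kw=10.0,
    grid_limit_kw=45.0,
)
print(safe_action)
```

A real MPC would optimize over a prediction horizon and account for departure times and energy prices; the projection above only checks a single time step.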

- CLIENT Kropman

- YEAR 2024

- WE DID Research, Software Engineering

- PARTNERS Andrey Poddubnyy, Shalika Walker, Phuong Nguyen

- CATEGORY Research and Simulation
